Research Activity

My research focuses on developing efficient and adaptive foundation models, with a primary emphasis on generative models. A major theme of my recent work is the intersection of model efficiency and generative architectures, including effective techniques for model distillation and for compressing large-scale models such as Mixture-of-Experts (MoE). I am also keen to explore new research directions, including the interpretability of foundation models and reasoning capabilities in vision models.

I have also served multiple times as a reviewer for conferences including CVPR, ICML, ICLR, WACV, and AAMAS.

Publications

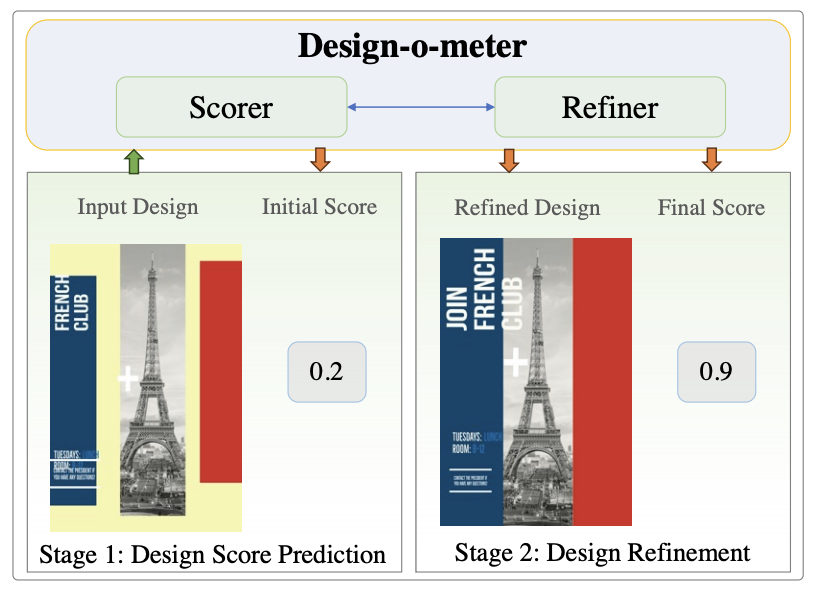

Design-o-meter: Towards Evaluating and Refining Graphic Designs

Sahil Goyal, Abhinav Mahajan, KJ Joseph et al.

WACV 2025